Cosmological modeling software reveals more than it was taught to reveal. Article on Medium by James Maynard (The Cosmic Companion)

“It’s like teaching image recognition software with lots of pictures of cats and dogs, but then it’s able to recognize elephants. Nobody knows how it does this, and it’s a great mystery to be solved,” stated Shirley Ho, group leader at the Flatiron Institute’s Center for Computational Astrophysics and adjunct professor at Carnegie Mellon University.

It’s frustrating to see such poor science journalism.

“It’s like teaching image recognition software with lots of pictures of cats and dogs, but then it’s able to recognize elephants. Nobody knows how it does this, and it’s a great mystery to be solved,” stated Shirley Ho, group leader at the Flatiron Institute’s Center for Computational Astrophysics and adjunct professor at Carnegie Mellon University.

Neural networks can make good predictions when trained with good data, but neural networks don’t offer explanations. It’s not a mystery; it’s a limitation of neural networks.

You lost me here, Dan… What are you saying and how does it pertain to the point of the article?

Neural networks have the capacity to make predictions beyond the data they are trained on, but they do not explain how the prediction is made. It’s a sort of black-box model: it can make predictions, but you don’t know what is going on inside the box to produce them. That’s an oversimplification; you can dive into the layers of the network and maybe discern what is happening, but it’s going to be difficult to describe.

Contrast this with a statistical model (a mathematical equation allowing for some random error), which can also make predictions, but might give very wrong answers if that model is incorrect. The model can be used to answer questions about how the predictions are formed. Statistical models are ultimately a choice by the person creating the model. Neural networks have no model and are trained by the data with no input from a human.
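To make the contrast concrete, here is a minimal sketch of the statistical-model side, assuming made-up data generated from the rule y = 1 + 2x plus Gaussian noise (`fit_line` is a hypothetical helper, not from the article). The person chose the form of the model (a straight line), and the two fitted numbers *are* the explanation: the slope says how much y changes per unit of x. A trained neural network also ends up with numbers (its weights), but there are usually thousands or millions of them and none maps onto a statement that simple.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for the human-chosen model y = a + b*x (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx              # slope: change in y per unit change in x
    a = mean_y - b * mean_x    # intercept: predicted y at x = 0
    return a, b

# Hypothetical data: the "true" rule is y = 1.0 + 2.0*x, plus random error.
random.seed(0)
xs = [i / 20 for i in range(200)]  # 0.0 .. 9.95
ys = [1.0 + 2.0 * x + random.gauss(0.0, 1.0) for x in xs]

intercept, slope = fit_line(xs, ys)
print(f"fitted model: y = {intercept:.2f} + {slope:.2f} * x")
```

If the data had actually come from a curve, this model would still happily report a straight line, which is the "very wrong answers if that model is incorrect" risk mentioned above.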

Neural networks are good at certain types of problems, like the one given in the article. The NN was able to do this without being given all the laws of physics related to dark matter, but it doesn’t tell us what those laws are. We already know them, of course, and it’s nifty that the NN could do this without being trained for it. What I’m saying is that there really is no mystery here; it’s just a NN doing its job.

I wish that I understood more about the subject matter here… it just seems odd that someone with the above title would not realize that this is to be expected. Her example is “teaching (it) to recognize cats and dogs, but then it is able to recognize elephants.” Is this analogy incorrect, then?

I think it’s a very poor analogy.

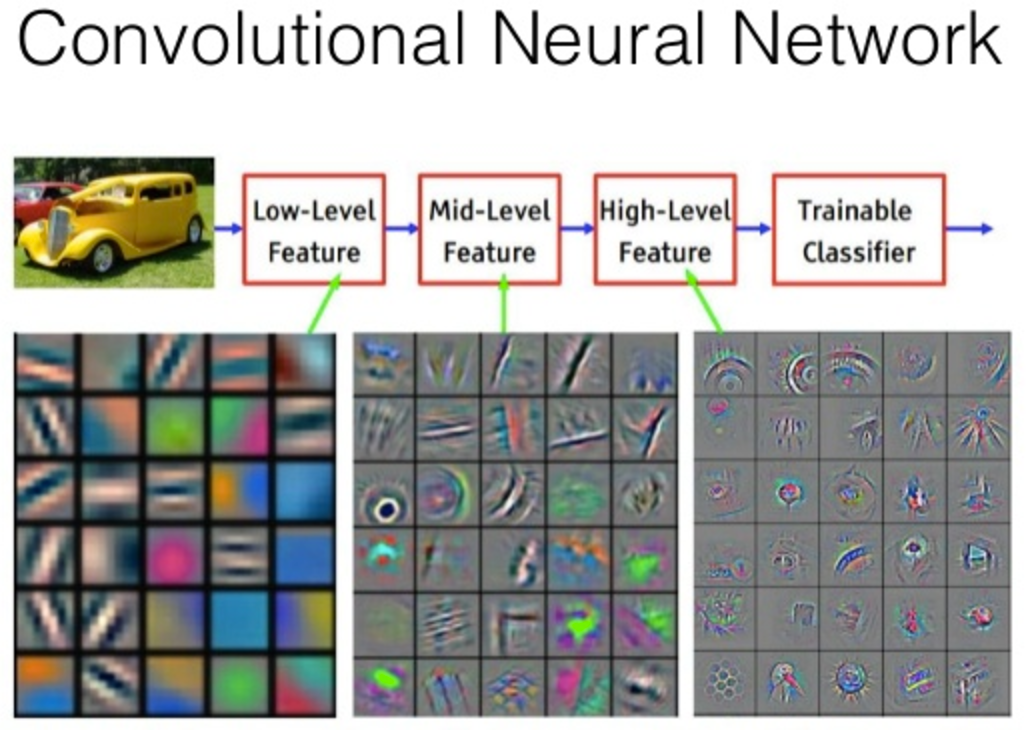

First, a glance at the original paper reveals that the neural network has a convolutional architecture. I.e., it’s a CNN. A CNN uses “representation learning” to discover, without human intervention, the features in the input data that are useful for making accurate predictions. These features typically involve some sort of transformation (filtering, aggregation, projection, etc.) to represent the input. And since it is a machine that has learned the representations, we humans often find the representations difficult to grasp. Here’s an example, of features that a CNN might use to detect an automobile:
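To give a feel for the “filtering” and “aggregation” steps, here is a toy sketch in plain Python. It assumes a tiny hand-made 5×5 “image” and a hand-picked vertical-edge filter; in a real CNN (including the one in the paper) the filter values are learned from data and many such layers are stacked, so treat this only as an illustration of what a single filter computes. As in common deep-learning libraries, the “convolution” here is a 2D cross-correlation with no padding.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and take weighted sums."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]

def global_max_pool(feature_map):
    """Aggregate a whole feature map into one number: the strongest response."""
    return max(max(row) for row in feature_map)

# Toy 5x5 "image": dark on the left, bright on the right, so one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked vertical-edge filter; a trained CNN would learn its own numbers.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

features = relu(conv2d_valid(image, vertical_edge))
print(features)                   # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
print(global_max_pool(features))  # 3
```

The feature map lights up exactly where the dark-to-bright edge sits. An edge detector is easy for us to name; the filters a network learns in deeper layers are combinations of combinations of such responses, which is why they quickly become hard to describe in words.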

In the course of performing its representation learning, the “Deep Density Displacement Model” seems to have discovered a mathematical representation of dark matter that is useful for predicting the evolution of large-scale structures. In my opinion, that’s why it is able to answer other questions about dark matter effects that it was not explicitly trained for.

Is this ability surprising? Yes, in the sense that it could not have been deterministically predicted in advance of the experiment. But no, given the other surprising things neural networks have been able to do. For example, a neural network trained to make personalized movie recommendations might make pretty good book recommendations, provided that the book purchase data could be supplied in an input format similar to the one used for movie rentals.

Does that provide a little clarity? Feel free to push back or ask questions.

Thanks,

Chris

Thanks Chris! That helps a lot… So, correct me if I’m wrong: unintended consequences arising from work done on/by these neural networks are to be expected.

Her example:

… like teaching image recognition software with lots of pictures of cats and dogs, but then it’s able to recognize elephants

… is not surprising because elephants were recognized… Maybe, to continue the same line of thought, it was surprising because elephants were recognized, and elephant recognition is really beneficial, or might potentially have been a next step in the advancement of this software?

Forget the elephants!

A better analogy would be to say that a CNN trained to recognize dogs and cats might also have some success in recognizing dog houses and cat beds because those structures are frequently in the background of the training images.
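Here is a toy sketch of that doghouse effect, under loud assumptions: the “photos” are invented and reduced to two hand-made yes/no features, and a two-weight logistic regression stands in for the CNN. Because the dog detector is imperfect and doghouses show up mostly in dog photos, training gives the doghouse feature a positive weight, so a photo containing only a doghouse scores as more dog-like than an empty one.

```python
import math

# Hypothetical toy data. Each "photo" is reduced to two binary features:
#   x0 = a noisy "dog-shaped animal" detector (it sometimes misfires)
#   x1 = "a doghouse is visible in the background"
# Label y: 1 = dog photo, 0 = cat photo.
dogs = [((1, 1), 1)] * 5 + [((1, 0), 1)] * 3 + [((0, 1), 1)] + [((0, 0), 1)]
cats = [((0, 0), 0)] * 7 + [((1, 0), 0)] * 2 + [((0, 1), 0)]
DATA = dogs + cats

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(data, lr=0.5, epochs=5000):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    w0 = w1 = b = 0.0
    n = len(data)
    for _ in range(epochs):
        g0 = g1 = gb = 0.0
        for (x0, x1), y in data:
            err = sigmoid(w0 * x0 + w1 * x1 + b) - y
            g0 += err * x0
            g1 += err * x1
            gb += err
        w0 -= lr * g0 / n
        w1 -= lr * g1 / n
        b -= lr * gb / n
    return w0, w1, b

w0, w1, b = train_logistic(DATA)

def p_dog(x0, x1):
    """Model's probability that a photo with these features shows a dog."""
    return sigmoid(w0 * x0 + w1 * x1 + b)

print(f"weight on dog detector: {w0:.2f}, weight on doghouse: {w1:.2f}")
print(f"P(dog | nothing in frame):         {p_dog(0, 0):.2f}")
print(f"P(dog | only a doghouse in frame): {p_dog(0, 1):.2f}")
```

Nobody told the model that doghouses matter; it picked up the association from how the training photos happened to be composed.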

It’s not a perfect analogy, but it’s the best I can come up with in under 5 minutes.

Best,

Chris

Oh, thank God! I thought they’d start rising against us soon enough.

But we don’t understand why the model was able to do this, the way we understand that doghouses are likely to appear in images of dogs, correct? And we have learned that there is in fact some sort of not-yet-understood connection?

I like that example; it gets right to the point. The NN might recognize doghouses because of their association with dogs. WE are not surprised that doghouses exist, but we might be surprised that the NN learned this connection.